Object Detection

After the setup, the next step is recognizing objects in images and in video streams, using the pre-trained model "yolo11n.pt". I am fairly sure it was trained on the COCO dataset, because the classes it can recognize match the 80 COCO classes exactly.
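The class list can also be checked in code instead of by eye. Ultralytics models expose their class names as `model.names`, a dictionary from class index to label. The sketch below compares such a dictionary against the 80 COCO class names in index order. The list was typed in following the naming used in Ultralytics' `coco.yaml` (for example "motorcycle", "couch", "tv"), so treat it as an assumption and compare against that file if the check fails unexpectedly. `matches_coco` is a hypothetical helper name.

```python
# The 80 COCO detection classes in index order, named as in Ultralytics' coco.yaml
# (assumption: typed in by hand, verify against the file if in doubt).
COCO_CLASSES = [
    "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck",
    "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench",
    "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra",
    "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",
    "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
    "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange",
    "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
    "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse",
    "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink",
    "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
    "hair drier", "toothbrush",
]


def matches_coco(names: dict[int, str]) -> bool:
    """Return True if an index->label mapping contains exactly the COCO classes in COCO order.

    Hypothetical helper; `names` is expected to look like Ultralytics' `model.names`.
    """
    # Sort by class index so the dictionary's insertion order does not matter
    return [names[i] for i in sorted(names)] == COCO_CLASSES
```

If the assumption holds, `matches_coco(YOLO("yolo11n.pt").names)` should return `True`.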

First YOLO Test

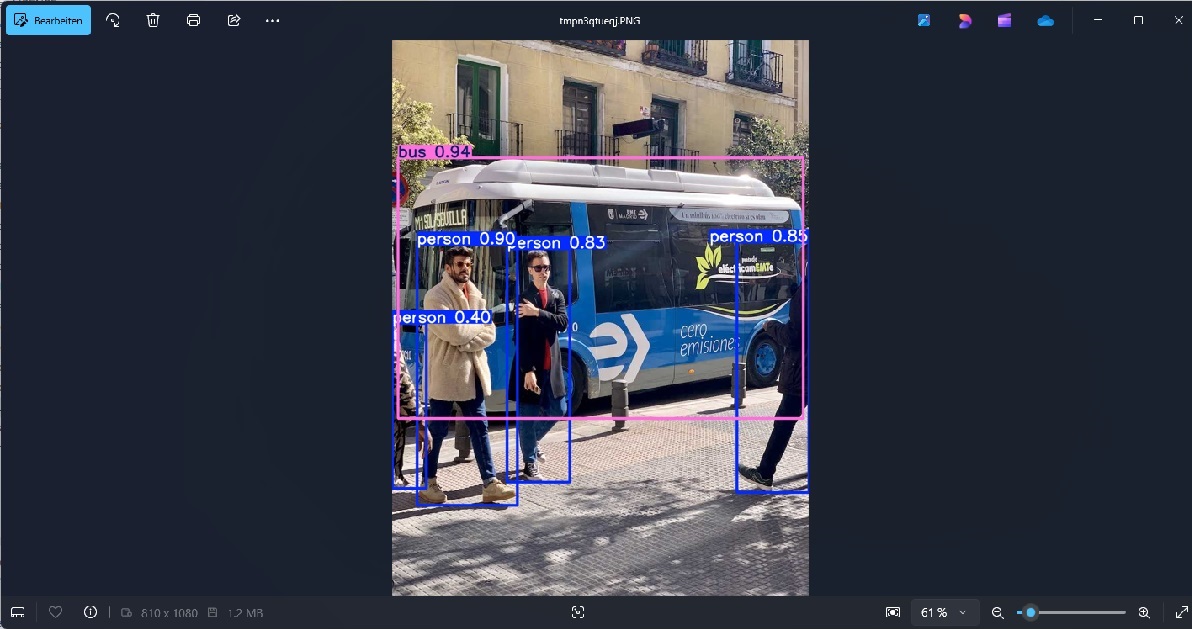

This is the standard test for YOLO: load the "bus image" from the Ultralytics website and let YOLO recognize the objects in it:

```python
from ultralytics import YOLO

# Load the pretrained model
model = YOLO("yolo11n.pt")

# Recognize the objects in the image
results = model("https://ultralytics.com/images/bus.jpg")

# Visualize the results
for result in results:
    result.show()
```

The result should look like this:

Conversion To NCNN

NCNN is a highly efficient inference framework optimized for mobile and embedded devices. Combined with YOLO (You Only Look Once), it offers several advantages. It allows YOLO models to run in real time on mobile devices without relying on high-performance GPUs. NCNN is also platform-independent and does not require special runtime dependencies such as CUDA or OpenCL, which makes deploying YOLO on devices without GPUs or specialized hardware, like the Raspberry Pi, more straightforward.

```python
from ultralytics import YOLO

# Load a YOLO11n PyTorch model
model = YOLO("yolo11n.pt")

# Export the model to NCNN format
model.export(format="ncnn")  # creates 'yolo11n_ncnn_model'

# Load the exported NCNN model
ncnn_model = YOLO("yolo11n_ncnn_model")
```
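The export only has to be repeated when `yolo11n.pt` itself changes, so the export step can be guarded. This is a minimal sketch that assumes comparing file modification times is a good enough signal that the model changed (it is not a content check). `needs_export` and `load_ncnn_model` are hypothetical helper names, and the output directory is assumed to follow the `yolo11n_ncnn_model` naming seen above.

```python
import os


def needs_export(pt_path: str, export_dir: str) -> bool:
    """Return True if the NCNN export is missing or older than the PyTorch weights.

    Hypothetical helper: uses modification times as a stand-in for "the model changed".
    """
    if not os.path.isdir(export_dir):
        return True  # nothing exported yet
    if not os.path.exists(pt_path):
        return False  # no weights to compare against, keep the existing export
    return os.path.getmtime(pt_path) > os.path.getmtime(export_dir)


def load_ncnn_model(pt_path: str = "yolo11n.pt", export_dir: str = "yolo11n_ncnn_model"):
    """Export to NCNN only when needed, then load the exported model."""
    from ultralytics import YOLO  # imported here so needs_export works without ultralytics

    if needs_export(pt_path, export_dir):
        # Assumption: Ultralytics writes the export to export_dir ('<stem>_ncnn_model')
        YOLO(pt_path).export(format="ncnn")
    return YOLO(export_dir)
```

With these helpers, `ncnn_model = load_ncnn_model()` would replace the export-and-load lines.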

Improved Test

We repeat the test from above, but this time with the saved NCNN model. There is no need to convert the model again as long as the original YOLO model does not change.

```python
from ultralytics import YOLO

# Load the exported NCNN model
ncnn_model = YOLO("yolo11n_ncnn_model")

# Recognize the objects in the image
results = ncnn_model("https://ultralytics.com/images/bus.jpg")

# Visualize the results
for result in results:
    result.show()
```
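To see how much the NCNN conversion actually gains on the Raspberry Pi, both models can be timed on the same image. The sketch below uses only `time.perf_counter` and skips a warm-up call so one-off setup costs do not distort the average. `mean_latency_ms` is a hypothetical helper; the usage lines assume a local copy of the bus image saved as `bus.jpg`, so repeated downloads are not measured. The numbers will depend on the Pi model, its cooling and the image size.

```python
import time
from typing import Callable


def mean_latency_ms(run: Callable[[], object], repeats: int = 10, warmup: int = 1) -> float:
    """Call `run` `warmup` times untimed, then return the mean time of `repeats` calls in ms."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        run()  # first calls include one-off setup costs, so they are not timed
    start = time.perf_counter()
    for _ in range(repeats):
        run()
    return (time.perf_counter() - start) * 1000 / repeats


# Usage sketch (assumes a local "bus.jpg"):
# import cv2
# from ultralytics import YOLO
# frame = cv2.imread("bus.jpg")
# for name in ("yolo11n.pt", "yolo11n_ncnn_model"):
#     model = YOLO(name)
#     print(name, mean_latency_ms(lambda: model(frame, verbose=False)))
```

Alternatively, each Ultralytics result carries a `speed` dictionary with per-stage times (preprocess, inference, postprocess) in milliseconds.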

Video Capture

Analyzing the frames of a video stream is just as easy. However, the Raspberry Pi quickly reaches its performance limits at high frame rates and with many objects in the scene:

```python
import cv2
from ultralytics import YOLO

# Load the YOLO model
model = YOLO("yolo11n_ncnn_model")

# Open the video stream (camera index 0)
cam = cv2.VideoCapture(0)

# Loop through the video frames
while cam.isOpened():
    # Read a frame from the video
    success, frame = cam.read()

    if success:
        # Run YOLO inference on the frame
        results = model(frame)

        # Visualize the results on the frame
        annotated_frame = results[0].plot()

        # Display the annotated frame
        cv2.imshow("YOLO", annotated_frame)

        # Break the loop if 'q' is pressed
        if cv2.waitKey(1) == ord("q"):
            break
    else:
        # Break the loop if the end of the video is reached
        break

# Release the video capture object and close the display window
cam.release()
cv2.destroyAllWindows()
```
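To put a number on when the Raspberry Pi reaches its limits, the capture loop can report its frame rate. This is a minimal sketch of a rolling frames-per-second counter over the last few frames; the class name `FpsMeter` and the default window of 30 frames are illustrative choices, not part of Ultralytics or OpenCV.

```python
import time
from collections import deque
from typing import Optional


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frame timestamps."""

    def __init__(self, window: int = 30):
        if window < 2:
            raise ValueError("window must hold at least two timestamps")
        self.stamps = deque(maxlen=window)  # oldest timestamps drop out automatically

    def tick(self, now: Optional[float] = None) -> float:
        """Record one frame and return the FPS estimate (0.0 until two frames are seen)."""
        self.stamps.append(time.perf_counter() if now is None else now)
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


# Usage sketch for the capture loop above:
# meter = FpsMeter()                      # before the while loop
# fps = meter.tick()                      # after results[0].plot()
# cv2.putText(annotated_frame, f"{fps:.1f} FPS", (10, 30),
#             cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)
```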

Video Capture Test

My plan is to use YOLO to search for missing persons with drones. That's why I ran a test that roughly reproduces the perspective of a drone about 10 meters above ground. The main question was whether people can still be recognized correctly from this distance and perspective.
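Whether a person is still recognizable depends largely on how many pixels they cover in the image YOLO actually processes. A rough pinhole-camera estimate: a camera with horizontal field of view θ at distance d covers a width of 2 · d · tan(θ/2), so an object of width w spans about w · W / (2 · d · tan(θ/2)) pixels in an image W pixels wide. Ultralytics resizes each frame to the inference size (640 px by default) before detection, so W should be that inference width rather than the camera resolution. The field of view and person width below are assumptions, not measured values, and the estimate ignores camera tilt and lens distortion. `pixels_on_target` is a hypothetical helper.

```python
import math


def pixels_on_target(object_width_m: float, distance_m: float,
                     hfov_deg: float, image_width_px: int) -> float:
    """Approximate width in pixels of an object facing a pinhole camera.

    The horizontal field of view covers 2 * d * tan(hfov / 2) meters at distance d;
    the object's share of that width is scaled to the image width in pixels.
    """
    if distance_m <= 0 or not 0 < hfov_deg < 180:
        raise ValueError("distance must be positive and 0 < hfov_deg < 180")
    footprint_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return object_width_m * image_width_px / footprint_m
```

For example, with an assumed 60° horizontal field of view, a person about 0.5 m wide seen from 10 m and the default 640 px inference width, `pixels_on_target(0.5, 10, 60, 640)` gives about 27.7 px. For a person-only search, the Ultralytics predict call also accepts `classes=[0]` (class 0 is "person" in COCO) to drop all other detections.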